This is a small demonstration of a project I’ve been working on, mainly meant to showcase it to interviewers and clients :)

Login Screen. Image Credits


Context

Issues with Paid APIs

- Most algo-trading APIs are quite restrictive: you can only call them a few times per minute. Prices of multiple scrips can be fetched in one call, but at the cost of more complex code. Classes and functions written to act on a single security then need an extra higher-order iteration layer to work on whole batches.

- Real-time prices arrive via blocking web sockets (a thread or process has to be dedicated to them), while historical prices come from a REST API. This undeniably increases code complexity.

- Limits on the number of web socket connections: each algo runs as a separate process with its own connection, and brokers limit web socket connections to 3 or 5, which caps how many processes we can run.

- Difficult to make the setup system-agnostic (i.e. centralised in the cloud), since in most cases we need our own custom handlers in addition to the paid APIs.

- Restrictive clients provided by service providers.

- A restart is needed whenever the configuration changes.

- Difficult to parallelise.

- Difficult to use multiple data providers.
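The first point can be made concrete with a short sketch of what batching under a rate limit does to client code. It assumes a hypothetical batch endpoint `fetch_quotes(symbols)` and a limit of a few calls per time window; `SlidingWindowLimiter` and `batched` are illustrative names, not any broker's client API. Note how a function that handles one security has to be wrapped in an extra loop over each batch.

```python
import time
from collections import deque
from typing import Callable, Dict, Iterable, List


class SlidingWindowLimiter:
    """Blocks so that at most `max_calls` calls happen in any `period`-second window."""

    def __init__(self, max_calls: int, period: float,
                 clock: Callable[[], float] = time.monotonic,
                 sleep: Callable[[float], None] = time.sleep) -> None:
        self.max_calls = max_calls
        self.period = period
        self.clock = clock
        self.sleep = sleep
        self.calls: deque = deque()  # timestamps of calls still inside the window

    def acquire(self) -> None:
        now = self.clock()
        while self.calls and now - self.calls[0] >= self.period:
            self.calls.popleft()  # forget calls that have left the window
        if len(self.calls) >= self.max_calls:
            # Wait until the oldest call in the window expires.
            self.sleep(self.period - (now - self.calls[0]))
            self.calls.popleft()
            now = self.clock()
        self.calls.append(now)


def batched(per_security: Callable[[str, float], float],
            fetch_quotes: Callable[[List[str]], Dict[str, float]],
            limiter: SlidingWindowLimiter,
            batch_size: int) -> Callable[[Iterable[str]], Dict[str, float]]:
    """Lift a one-security function into one that handles many, one rate-limited call per batch."""
    def run(symbols: Iterable[str]) -> Dict[str, float]:
        symbols = list(symbols)
        results: Dict[str, float] = {}
        for i in range(0, len(symbols), batch_size):
            chunk = symbols[i:i + batch_size]
            limiter.acquire()
            quotes = fetch_quotes(chunk)
            for sym in chunk:  # the extra iteration layer the per-security code now needs
                results[sym] = per_security(sym, quotes[sym])
        return results
    return run


# Usage with a fake provider that returns a fixed price for every symbol.
def fake_fetch_quotes(chunk: List[str]) -> Dict[str, float]:
    return {sym: 100.0 for sym in chunk}


run = batched(lambda sym, price: price * 2, fake_fetch_quotes,
              SlidingWindowLimiter(max_calls=3, period=60.0), batch_size=2)
print(run(["INFY", "TCS", "SBIN", "ITC", "WIPRO"]))
```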

Objectives

- abstract away all the complexities mentioned above.

- cater to a much larger scale by relying on in-house infrastructure.

- smarter and faster client side code to better handle faults.

- one unified system to handle data from different service providers.

- reduce data provider cost.

Features

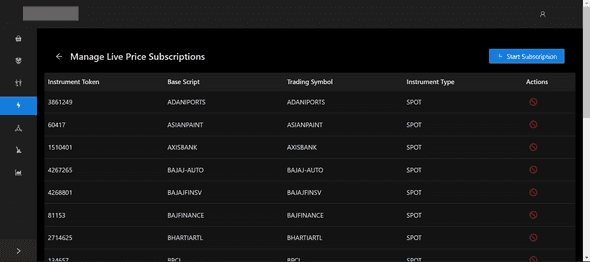

Distributed Web Sockets

- A separate group of processes, exposed over an API.

- Go is used to fetch real-time prices over web sockets.

- The number of workers can be specified. For example, you can ask for real-time prices of 100 scrips using 3 workers, and the load is split evenly across the 3 workers.
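The even split described above can be sketched as a contiguous partition in which chunk sizes differ by at most one, so 100 scrips over 3 workers become chunks of 34, 33 and 33. This is a Python illustration of the idea only (the real-time workers themselves are written in Go), and `split_evenly` is a hypothetical name.

```python
from typing import List, Sequence, TypeVar

T = TypeVar("T")


def split_evenly(items: Sequence[T], workers: int) -> List[List[T]]:
    """Partition `items` into `workers` contiguous chunks whose sizes differ by at most one."""
    if workers < 1:
        raise ValueError("need at least one worker")
    base, extra = divmod(len(items), workers)
    chunks: List[List[T]] = []
    start = 0
    for w in range(workers):
        size = base + (1 if w < extra else 0)  # the first `extra` workers take one more item
        chunks.append(list(items[start:start + size]))
        start += size
    return chunks


scrips = [f"SCRIP{i}" for i in range(100)]
print([len(chunk) for chunk in split_evenly(scrips, 3)])  # [34, 33, 33]
```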

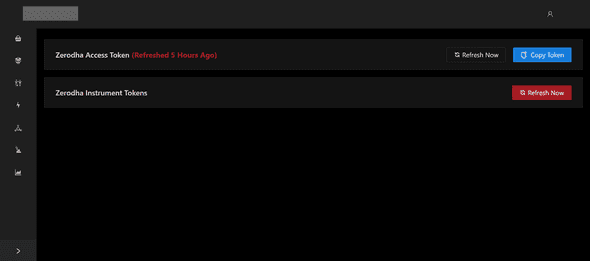

- Can be controlled using a REST API: new subscriptions can be added and existing instruments stopped without restarting the application, so there are no disruptions.
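Adding and stopping subscriptions without a restart mostly comes down to keeping the subscription set in shared, lock-protected state that workers re-read, rather than in start-up configuration. A minimal sketch, assuming a hypothetical `SubscriptionRegistry` that the REST handlers would call into; each worker periodically diffs the registry against what it is currently streaming.

```python
import threading
from typing import FrozenSet, Set, Tuple


class SubscriptionRegistry:
    """Thread-safe set of instruments that the workers should be streaming."""

    def __init__(self) -> None:
        self._lock = threading.Lock()
        self._symbols: Set[str] = set()
        self._version = 0  # bumped on every change so workers can cheaply detect updates

    def add(self, symbol: str) -> None:
        with self._lock:
            if symbol not in self._symbols:
                self._symbols.add(symbol)
                self._version += 1

    def remove(self, symbol: str) -> None:
        with self._lock:
            if symbol in self._symbols:
                self._symbols.discard(symbol)
                self._version += 1

    def snapshot(self) -> Tuple[int, FrozenSet[str]]:
        with self._lock:
            return self._version, frozenset(self._symbols)


def diff(current: Set[str], desired: FrozenSet[str]) -> Tuple[Set[str], Set[str]]:
    """Return (to_subscribe, to_unsubscribe) for a worker currently streaming `current`."""
    return set(desired - current), set(current - desired)


registry = SubscriptionRegistry()
registry.add("INFY")
registry.add("TCS")
streaming = {"TCS", "SBIN"}
version, desired = registry.snapshot()
print(diff(streaming, desired))  # ({'INFY'}, {'SBIN'})
```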

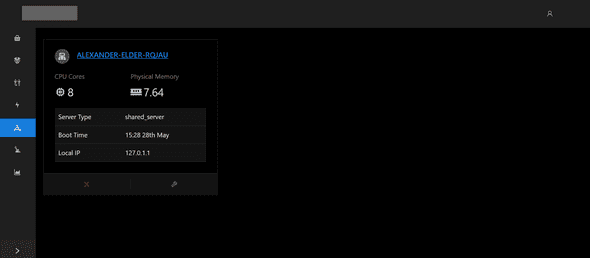

Run Everywhere, Control from Anywhere

- You can register any number of computers (laptops, EC2 instances, Docker containers) by running a small custom process manager on each of them.
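One way to keep track of registered machines is a heartbeat registry: each process manager reports in periodically with a few statistics, and a machine that stays silent longer than a timeout is treated as offline. The sketch below assumes that design; `MachineRegistry`, the 30-second default timeout and the reported fields are hypothetical.

```python
import time
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class Machine:
    name: str
    last_seen: float
    stats: Dict[str, float] = field(default_factory=dict)


class MachineRegistry:
    """Tracks machines by their most recent heartbeat."""

    def __init__(self, timeout: float = 30.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.timeout = timeout
        self.clock = clock
        self.machines: Dict[str, Machine] = {}

    def heartbeat(self, name: str, stats: Dict[str, float]) -> None:
        """Called periodically by each machine's process manager; the first call registers it."""
        self.machines[name] = Machine(name, self.clock(), dict(stats))

    def online(self) -> List[str]:
        """Names of machines heard from within the last `timeout` seconds."""
        now = self.clock()
        return sorted(m.name for m in self.machines.values()
                      if now - m.last_seen <= self.timeout)


registry = MachineRegistry()
registry.heartbeat("laptop", {"cpu_percent": 12.5})
registry.heartbeat("ec2-1", {"cpu_percent": 40.0})
print(registry.online())  # ['ec2-1', 'laptop']
```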

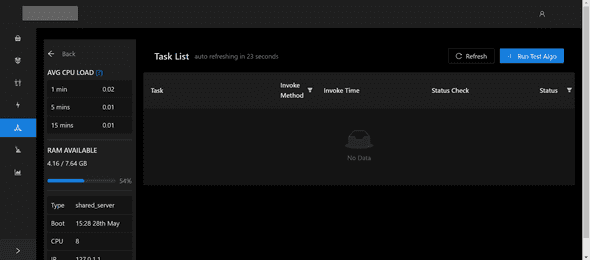

Quick Statistics of All Compute Running Algos

- The process manager can be extended further to collect more system statistics. You can also submit jobs/algos to run on the registered machines.

Start and Stop Individual Tasks on Each Computer

- This makes it really convenient to start and stop algos without having to SSH into servers or log in to your laptop. You can log in from anywhere and keep track of ongoing tasks.
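On each machine, the core of such a process manager is starting, stopping and reporting on local algo processes. A minimal sketch using Python's `subprocess` module, assuming each task is simply a named command line; the `ProcessManager` class and its methods are hypothetical, and a real agent would also expose them over an API and handle logging.

```python
import subprocess
import sys
from typing import Dict, List


class ProcessManager:
    """Starts, stops and reports on named local processes."""

    def __init__(self) -> None:
        self.tasks: Dict[str, subprocess.Popen] = {}

    def start(self, name: str, cmd: List[str]) -> None:
        if name in self.tasks and self.tasks[name].poll() is None:
            raise RuntimeError(f"task {name!r} is already running")
        self.tasks[name] = subprocess.Popen(cmd)

    def stop(self, name: str, timeout: float = 5.0) -> None:
        proc = self.tasks.pop(name)
        if proc.poll() is None:
            proc.terminate()  # ask the process to exit first
            try:
                proc.wait(timeout=timeout)
            except subprocess.TimeoutExpired:
                proc.kill()  # force it if it does not exit in time
                proc.wait()

    def status(self) -> Dict[str, str]:
        return {name: "running" if p.poll() is None else f"exited ({p.returncode})"
                for name, p in self.tasks.items()}


pm = ProcessManager()
pm.start("sleeper", [sys.executable, "-c", "import time; time.sleep(60)"])
print(pm.status())  # {'sleeper': 'running'}
pm.stop("sleeper")
```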

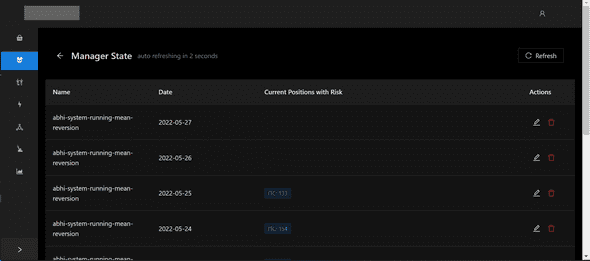

Centralised Risk Management

- Risk management should be centralised: the various running algos should communicate with each other and make informed decisions.

- You can observe how the maximum risk changes every day and spot trends.

Money at Risk by Each Algo Segregated by Stock

- Dashboard to tune risk and drawdown.
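A centralised risk manager can be sketched as a shared ledger that every algo consults before opening a position. This assumes money at risk is measured as quantity × |entry - stop-loss| and that there is a single global cap; both the formula and the `RiskLedger` API are assumptions for illustration, not the project's actual rules. Keying the ledger by (algo, stock) is what a "money at risk by algo, segregated by stock" view needs.

```python
import threading
from collections import defaultdict
from typing import Dict, Tuple


class RiskLedger:
    """Shared record of money at risk, keyed by (algo, stock), with a global cap."""

    def __init__(self, max_total_risk: float) -> None:
        self.max_total_risk = max_total_risk
        self._lock = threading.Lock()
        self._risk: Dict[Tuple[str, str], float] = defaultdict(float)

    @staticmethod
    def money_at_risk(qty: int, entry: float, stop_loss: float) -> float:
        return abs(qty) * abs(entry - stop_loss)

    def request(self, algo: str, stock: str, qty: int, entry: float, stop_loss: float) -> bool:
        """Reserve risk for a new position; refuse it if the global cap would be exceeded."""
        risk = self.money_at_risk(qty, entry, stop_loss)
        with self._lock:
            if sum(self._risk.values()) + risk > self.max_total_risk:
                return False
            self._risk[(algo, stock)] += risk
            return True

    def release(self, algo: str, stock: str) -> None:
        with self._lock:
            self._risk.pop((algo, stock), None)

    def by_algo(self) -> Dict[str, Dict[str, float]]:
        """Money at risk per algo, segregated by stock."""
        with self._lock:
            view: Dict[str, Dict[str, float]] = defaultdict(dict)
            for (algo, stock), risk in self._risk.items():
                view[algo][stock] = risk
            return dict(view)


ledger = RiskLedger(max_total_risk=10_000)
print(ledger.request("momentum", "INFY", 100, 1500.0, 1470.0))  # True (risk 3000)
print(ledger.request("meanrev", "TCS", 50, 3500.0, 3350.0))     # False (3000 + 7500 > 10000)
print(ledger.request("meanrev", "TCS", 20, 3500.0, 3350.0))     # True (risk 3000)
print(ledger.by_algo())  # {'momentum': {'INFY': 3000.0}, 'meanrev': {'TCS': 3000.0}}
```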

Unified Interface

- Sensible, intuitive APIs: one API for both real-time and historical prices, served using the real-time workers. This makes writing programs a lot easier.

- Can easily be extended to support multiple data providers using bridges and adapters.

- Extensible, normalised data models to go with them.
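The adapter idea can be sketched as one normalised tick model plus one small adapter per provider that maps the provider's raw payload onto it. Both payload shapes below are made up for illustration (they are not KiteConnect's or any other vendor's real message formats), and the `Tick`, `ProviderAdapter` and adapter class names are hypothetical.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Any, Dict, Protocol


@dataclass(frozen=True)
class Tick:
    """Provider-independent price update."""
    symbol: str
    price: float
    volume: int
    ts: datetime


class ProviderAdapter(Protocol):
    def to_tick(self, raw: Dict[str, Any]) -> Tick: ...


class ProviderAAdapter:
    # Hypothetical payload: {"sym": "INFY", "ltp": 1500.5, "vol": 10, "epoch_ms": 1700000000000}
    def to_tick(self, raw: Dict[str, Any]) -> Tick:
        ts = datetime.fromtimestamp(raw["epoch_ms"] / 1000, tz=timezone.utc)
        return Tick(raw["sym"], float(raw["ltp"]), int(raw["vol"]), ts)


class ProviderBAdapter:
    # Hypothetical payload: {"instrument": "NSE:INFY", "last": "1500.50", "qty": "10",
    #                        "time": "2023-11-14T22:13:20+00:00"}
    def to_tick(self, raw: Dict[str, Any]) -> Tick:
        symbol = raw["instrument"].split(":", 1)[-1]  # drop the exchange prefix
        return Tick(symbol, float(raw["last"]), int(raw["qty"]),
                    datetime.fromisoformat(raw["time"]))


ADAPTERS: Dict[str, ProviderAdapter] = {"a": ProviderAAdapter(), "b": ProviderBAdapter()}

a = ADAPTERS["a"].to_tick({"sym": "INFY", "ltp": 1500.5, "vol": 10, "epoch_ms": 1700000000000})
b = ADAPTERS["b"].to_tick({"instrument": "NSE:INFY", "last": "1500.50", "qty": "10",
                           "time": "2023-11-14T22:13:20+00:00"})
print(a == b)  # True
```

Downstream algo code then only ever sees `Tick`, so adding a provider means adding one adapter rather than touching every strategy.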

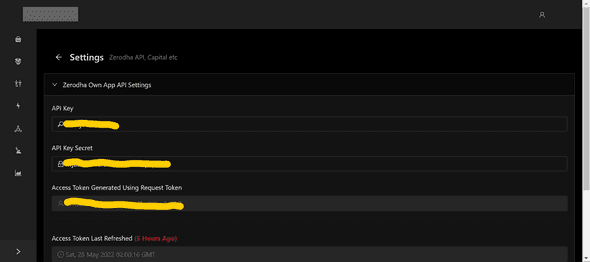

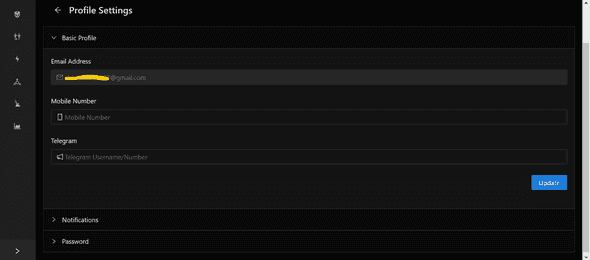
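One plausible way to serve historical prices from the real-time workers is to store their ticks and aggregate them into candles on demand. A minimal pure-Python sketch of that aggregation, assuming time-sorted ticks given as (epoch seconds, price, volume) tuples and one-minute buckets; pandas, which the project already uses, offers the same operation through `resample(...).ohlc()`, so this version only shows the logic.

```python
from typing import Dict, Iterable, List, Tuple

Candle = Dict[str, float]


def to_candles(ticks: Iterable[Tuple[float, float, int]], interval: int = 60) -> List[Candle]:
    """Aggregate time-sorted (epoch_seconds, price, volume) ticks into OHLCV candles."""
    candles: List[Candle] = []
    for ts, price, volume in ticks:
        bucket = int(ts // interval) * interval  # start time of the candle this tick falls in
        if candles and candles[-1]["start"] == bucket:
            c = candles[-1]
            c["high"] = max(c["high"], price)
            c["low"] = min(c["low"], price)
            c["close"] = price
            c["volume"] += volume
        else:
            candles.append({"start": bucket, "open": price, "high": price,
                            "low": price, "close": price, "volume": volume})
    return candles


ticks = [(0, 100.0, 5), (10, 101.0, 3), (59, 99.5, 2), (61, 100.5, 4)]
print(to_candles(ticks)[0])
# {'start': 0, 'open': 100.0, 'high': 101.0, 'low': 99.5, 'close': 99.5, 'volume': 10}
```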

Ease of Access

Shortcuts on the Home Page to Perform Everyday Actions

Tech Stack

- Languages Used: Go, Python, Node.js

- Databases Used: Cassandra, PostgreSQL, MongoDB, Redis

- Libraries and Frameworks

  - Python: Flask, NumPy, pandas, APScheduler, multiprocessing

  - Node.js: React, Ant Design

  - Go: Gin, KiteConnect, gabs

- Quite a few places required intricate parallel or concurrent code, coordinated using locks, semaphores and databases.

- Data cleaning, and finding the optimal update periods to minimise both intervals of stale data and network cost.

- Complex functional and object-oriented paradigms for client algo classes.
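As a small example of the kind of coordination mentioned above, a semaphore can cap how many threads call a rate-limited provider at once while a lock protects the shared results. This is a generic sketch built on Python's `threading.BoundedSemaphore` and `concurrent.futures.ThreadPoolExecutor`; `ThrottledFetcher` and `fetch_history` are hypothetical names, and the cap of 3 is arbitrary.

```python
import threading
import time
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict, Iterable


class ThrottledFetcher:
    """Runs `fetch` for many symbols on a thread pool, with at most `max_in_flight` calls at once."""

    def __init__(self, fetch: Callable[[str], int], max_in_flight: int) -> None:
        self._fetch = fetch
        self._slots = threading.BoundedSemaphore(max_in_flight)
        self._lock = threading.Lock()  # guards results and the counters below
        self.results: Dict[str, int] = {}
        self.active = 0
        self.peak = 0  # highest number of concurrent calls observed

    def _one(self, symbol: str) -> None:
        with self._slots:  # blocks while max_in_flight calls are already running
            with self._lock:
                self.active += 1
                self.peak = max(self.peak, self.active)
            try:
                value = self._fetch(symbol)
                with self._lock:
                    self.results[symbol] = value
            finally:
                with self._lock:
                    self.active -= 1

    def run(self, symbols: Iterable[str], threads: int = 8) -> Dict[str, int]:
        with ThreadPoolExecutor(max_workers=threads) as pool:
            list(pool.map(self._one, symbols))  # list() re-raises any worker exception
        return self.results


def fetch_history(symbol: str) -> int:
    time.sleep(0.05)  # simulate network latency of a provider call
    return len(symbol)


fetcher = ThrottledFetcher(fetch_history, max_in_flight=3)
out = fetcher.run([f"SCRIP{i}" for i in range(10)])
print(len(out), fetcher.peak <= 3)  # 10 True
```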